One of the interesting things about working in the field of business strategy is that the most inspiring thoughts relevant to one’s work are usually not found in textbooks. Dealing with spaceflight, science fiction and fringe technology, the io9 portal is about as geeky as they come and can hardly be considered typical reading for managers. It does, however, contain fascinating ideas like this one: How Bayes’ Rule Can Make You A Better Thinker. Being a better thinker is always useful in strategy, and the article makes a number of interesting points about how what we believe to be true is always uncertain to a degree, and about the fallacies that result from this. Unfortunately, it only skims over what Bayes’ Theorem actually says, although that is really not difficult to understand and can be quite useful, for example when dealing with warning signs or early indicators.

Obviously, Bayes’ Theorem as a mathematical equation is based on probabilities, and we have pointed out before that in management, as in many other aspects of real life, most probabilities we deal with can only be guessed. We’re not even good at guessing them. Still, the theorem is relevant even at the level of the rough estimates available for most strategic questions, and in cases like the following example, those estimates can be inferred fairly reasonably from previous market developments. Bayes’ Theorem can actually save you from spending money on strategy consultants like me, which should be enough to get most corporate employees’ attention.

So, here is the equation in its simplest form, and the only equation you will see in this post, with P(A) being the probability that A is true and P(A | B) being the probability that A is true under the assumption that B is true. In case you actually want more math, the Wikipedia article is a good starting point. In case that’s already plenty of math, hang in there; the example is just around the corner. And in case you don’t want a business strategy example at all, the very same reasoning applies to testing for rare medical conditions. This equation fits a lot of cases where we use one factor to predict another:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

What does that mean? Suppose we have an investment to make, maybe a product that we want to introduce into the market. If we’re in the pharmaceutical business, it may be a drug we’re developing; it can also be a consumer product, an industrial service, a financial security we’re buying or real estate we want to develop. It may even be your personal retirement plan. The regular homework has been done: ideally, you have developed and evaluated scenarios, and, assuming the usual ups and downs, the investment looks profitable.

However, we know that our investment has a small but relevant risk of catastrophic failure. Our company may be sued; the product may have to be taken off the market; your retirement plan may be based on Lehman Brothers certificates. Based on historical market data or any other educated way of guessing, we estimate that probability to be on the order of 5%.

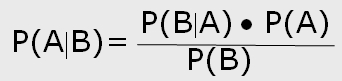

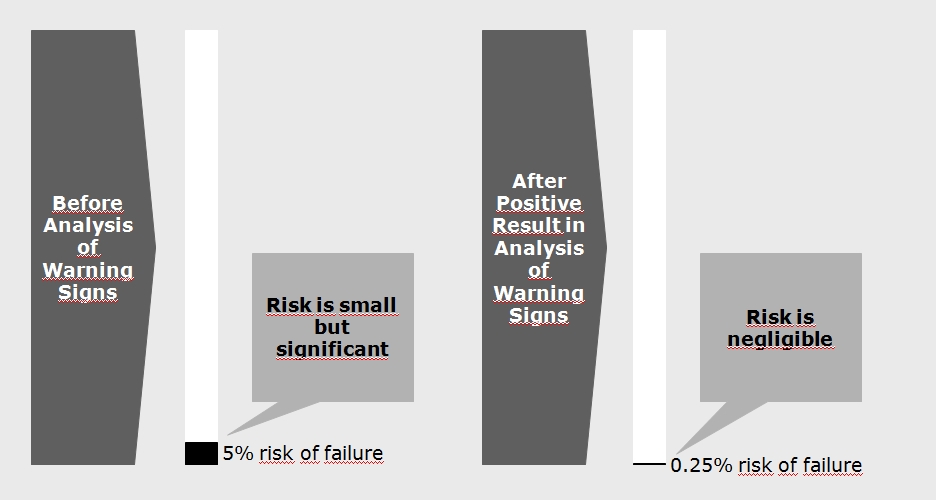

That is not a pleasant risk to have in the back of your mind, but help is on the way, or so it seems. There will be warning signs, and we can invest time and money in a careful analysis of these warning signs, for example by running a further clinical trial with the drug or by hiring a legal expert, market researcher or management consultant. That analysis will correctly predict the catastrophic failure in 95% of the cases where it actually occurs. A rare event with a 5% probability being predicted with a 95% probability means that the remaining risk of running into that failure without preparation is, theoretically, 5% × 5% = 0.25%, or, at any rate, very, very small. The analysis of warning signs will predict a failure in 25% of all cases, so there will be false warnings, but they seem acceptable given the (almost) certainty gained, right? Well, not necessarily. At first glance, the situation looks like this:
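To make the first-glance picture concrete, the sketch below spreads the post's three estimates (5% failure risk, 95% detection of real failures, 25% overall warning rate) over a portfolio of comparable investments. The portfolio size of 10,000 is a hypothetical assumption, chosen only so that the percentages turn into whole counts.

```python
# Spread the post's estimates over a hypothetical portfolio of comparable
# investments so that the four possible outcomes become whole counts.
N = 10_000          # hypothetical number of investments (assumption)
p_failure = 0.05    # P(A): probability of catastrophic failure
p_warning = 0.25    # P(B): probability that the analysis gives a warning
p_detect = 0.95     # P(B | A): probability that a real failure is flagged

failures = round(N * p_failure)          # investments that will fail
warnings = round(N * p_warning)          # investments flagged by the analysis
caught = round(failures * p_detect)      # failures preceded by a warning
missed = failures - caught               # failures without any warning
false_alarms = warnings - caught         # warnings not followed by failure
quiet_ok = N - failures - false_alarms   # no warning and no failure

print(f"warning, failure:        {caught:>5}")
print(f"warning, no failure:     {false_alarms:>5}")
print(f"no warning, failure:     {missed:>5}")
print(f"no warning, no failure:  {quiet_ok:>5}")
print(f"unprepared failures: {missed / N:.2%} of all cases")
```

The last line reproduces the 0.25% residual risk, but the counts also show that warnings without a subsequent failure far outnumber warnings followed by one.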

If we have plenty of alternatives and are just using our analysis to weed out the most dangerous ones, that should do the job. However, if the only option is to do or not do the investment and our analysis predicts a failure, what have we really learned? Here is where Bayes’ Theorem comes in. The probability of failure, P(A), is 5%, and the probability of the analysis giving a warning, P(B), is 25%. If a failure is going to occur, the probability of the analysis predicting it correctly, P(B | A), is 95%. Enter the numbers: if the analysis leads to a warning of failure, the probability of that failure actually occurring, P(A | B), is still only 0.95 × 0.05 / 0.25 = 19%. So, remembering that all our probabilities are, ultimately, guesses, all we now know is that the negative result of our careful and potentially costly analysis has changed the risk of failure from “small but significant” to “still quite small”.
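The calculation above can be written as a small function. This is a minimal sketch, assuming the three inputs are the rough estimates from the example; the function name `posterior` and its consistency check are illustrative choices, not part of the original post.

```python
def posterior(p_a: float, p_b: float, p_b_given_a: float) -> float:
    """Bayes' Theorem: P(A | B) = P(B | A) * P(A) / P(B)."""
    if not 0 < p_b <= 1:
        raise ValueError("P(B) must lie in (0, 1]")
    joint = p_b_given_a * p_a  # P(A and B): failure AND warning
    # The share of cases with both failure and warning cannot exceed
    # the share of cases with a warning at all.
    if joint > p_b:
        raise ValueError("inconsistent estimates: P(B|A) * P(A) exceeds P(B)")
    return joint / p_b


# The post's estimates: 5% failure risk, 25% warning rate, 95% detection.
p_fail_given_warning = posterior(p_a=0.05, p_b=0.25, p_b_given_a=0.95)
print(f"P(failure | warning)     = {p_fail_given_warning:.0%}")
print(f"P(false alarm | warning) = {1 - p_fail_given_warning:.0%}")
```

Read the other way round, roughly four out of five warnings from such an analysis would be false alarms.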

If the investment in question is the only potential new product in our pipeline, the one piece of real estate we are offered or the one pension plan our company will subsidize, such a result of our analysis will probably not change our decision at all. We will merely feel more or less wary about the investment depending on the outcome of the analysis, which raises the question: is the analysis worth doing? As a strategy consultant, I am fortunate to be able to say that companies generally spend only about one per mille (0.1%) of an investment amount on consultants for the strategic evaluation of that investment. Considering that a careful strategic analysis should give you insights beyond just the stop or go of an investment, that is probably still money well spent. On the other hand, additional clinical trials, prototypes or test markets beyond what has to be done anyway, just to follow up on a perceived risk, can cost serious money. So, unless there are other (for example ethical) reasons to try to be prepared, it is well worth asking whether such an analysis will really change your decisions.

Of course, if the indicators analyzed are more closely related to the output parameters and if the probability of a warning is closer to the actual risk to be predicted, the numbers can look much more favorable for an analysis of warning signs. Still, before doing an analysis, it should always be verified that the output will actually make a difference for the decisions to be made, and even if the precise probabilities are unknown, at least the idea of Bayes’ Theorem can help to do that. Never forget the false positives.
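The effect described above can be sketched by keeping the failure risk at 5% and the detection rate at 95%, as in the example, while letting the overall warning rate P(B) shrink toward the actual risk. The intermediate warning rates in the loop are illustrative assumptions, not estimates from the post. Note that P(B) can never be smaller than P(B | A) × P(A) = 4.75%, since at least the correctly flagged failures produce warnings.

```python
p_failure = 0.05  # P(A), taken from the post's example
p_detect = 0.95   # P(B | A), taken from the post's example

# Illustrative warning rates P(B) (assumptions), from the post's 25% down to
# the 5% failure risk itself; P(B) may not drop below P(B | A) * P(A) = 4.75%.
results = {}
for p_warning in (0.25, 0.15, 0.10, 0.06, 0.05):
    # Bayes' Theorem: P(A | B) = P(B | A) * P(A) / P(B)
    results[p_warning] = p_detect * p_failure / p_warning
    print(f"P(warning) = {p_warning:4.0%}  ->  "
          f"P(failure | warning) = {results[p_warning]:.0%}")
```

Only when the analysis rarely raises an alarm beyond the real failures does a warning come close to meaning that failure is likely; with the 25% warning rate of the example, it does not.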

Dr. Holm Gero Hümmler

Uncertainty Managers Consulting GmbH