Because of the local relevance, this article is first published in German.

Dr. Holm Gero Hümmler holds degrees in physics and in business physics (Diplom-Physiker and Diplom-Wirtschaftsphysiker). For more than 15 years he has worked as a management consultant on questions of planning under future uncertainty, mostly in larger companies. From 1993 to 1997 he was a city councillor and a member of the social affairs committee of the town of Nidderau.

At first glance, planning a corporate strategy has relatively little to do with municipal childcare. In the one case we are dealing with a profit-oriented company that can decide fairly freely on its lines of business and, if necessary, divest individual businesses entirely. In the other we are dealing with a municipal service that is partly a statutory duty and partly at least expected by the citizens concerned. Profit orientation is out of the question here, since this is one of the few municipal services for which fees, where they are still charged at all, cannot cover costs. Larger administrative units have by now begun to formulate strategic goals in the social sector as well, in the form of a mission, a vision, or guiding principles, but overly entrepreneurial thinking is in many areas neither realistic nor wanted.

One planning task from corporate strategy does, however, reappear almost unchanged in the childcare of practically every municipality: capacity planning. The challenges that arise are remarkably similar as well:

- Future demand volumes are, much as for a product established in the market, foreseeable in principle but subject to noticeable uncertainty.

- Building additional capacity is an investment of considerable size for the organisation as a whole.

- Even when funds are available, capacity cannot be built up or reduced at arbitrary speed, neither in staffing nor in real estate.

- Flexible capacity, for example in portable buildings or by busing children to other districts, has its price in the long run, not only in cost but also in the quality offered.

The future uncertainties initially appear more manageable in childcare, because competitor activity largely drops out as a source of uncertainty. Private childcare offerings are in many cases seen as relief rather than competition, and larger private or church-run day care centres are at least known to the municipalities through planning law and are usually coordinated with them. Significant uncertainty about the future development of supply is therefore likely to exist only for childminders and possibly private parent-run childcare associations. In addition, there may be uncertainty on the part of the federal state, or of the district as the body responsible for schools, about the introduction of all-day programmes at schools, which has a massive effect on future demand for after-school care places.

On the demand side, the population register statistics provide a good starting point for planning, but the uncertainties should not be underestimated. Especially in the growing field of toddler care, the lead time between birth trends and the need for care is short. In addition, societal developments can make demand for full-day kindergarten places, toddler care, or after-school care places fluctuate far more strongly than demand for the care of three- to five-year-olds, which is already taken up almost universally.

Good childcare is also increasingly becoming a location factor. This applies, first, to attracting companies, for which nearby childcare is itself an important factor in the competition for suitable employees. Only a few large companies are able to become active here themselves; medium-sized businesses generally depend on a good municipal offering. In rural areas, but also for the individual regions within a metropolitan area, good childcare is also a key to a demographic mix that stays stable and well balanced in the long term. Alongside affordable housing, childcare is probably the most important location factor for young families.

Municipalities therefore have a clear interest in ensuring good childcare even beyond their statutory duties. At the same time, precisely because demand in that area is especially uncertain, they must plan the required capacity cautiously in order to handle taxpayers' money responsibly. But how can such planning take adequate account of the existing uncertainties?

For exactly this kind of uncertainty there are planning approaches that have proven themselves in corporate planning. They make use of the fact that, although the actual future development cannot be predicted, the realistic range of possible developments can usually be narrowed down quite well for planning problems of this kind. For example, a rise in the number of toddlers in a municipality is followed, with high certainty and a delay of two to three years, by a rise in the number of kindergarten children. Likewise, the number of children needing care will not double within a short time, unless young families happen to be moving into a large volume of newly built housing in the district concerned.
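The delayed effect described above can be illustrated with a very simple cohort calculation. The following is only a sketch under stated assumptions: the birth figures are invented, the age bands (0 to 2 for toddlers, 3 to 5 for kindergarten) are a simplification, and migration is ignored entirely. The function name `children_in_age_band` is hypothetical.

```python
def children_in_age_band(births_by_year, year, min_age, max_age):
    """Count children aged min_age..max_age in `year`.

    Simplification: a child born in year b counts as (year - b) years old,
    and families moving in or out are ignored.
    """
    return sum(
        births_by_year.get(year - age, 0)
        for age in range(min_age, max_age + 1)
    )


# Invented example data: births jump from 100 to 130 per year in 2018.
births = {2015: 100, 2016: 100, 2017: 100, 2018: 130, 2019: 130, 2020: 130}

for year in range(2020, 2024):
    kindergarten = children_in_age_band(births, year, 3, 5)
    print(year, "kindergarten-age children:", kindergarten)
```

In this toy data the higher birth cohorts from 2018 onward only start to reach kindergarten age in 2021, which is the kind of predictable lag a capacity plan can rely on.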

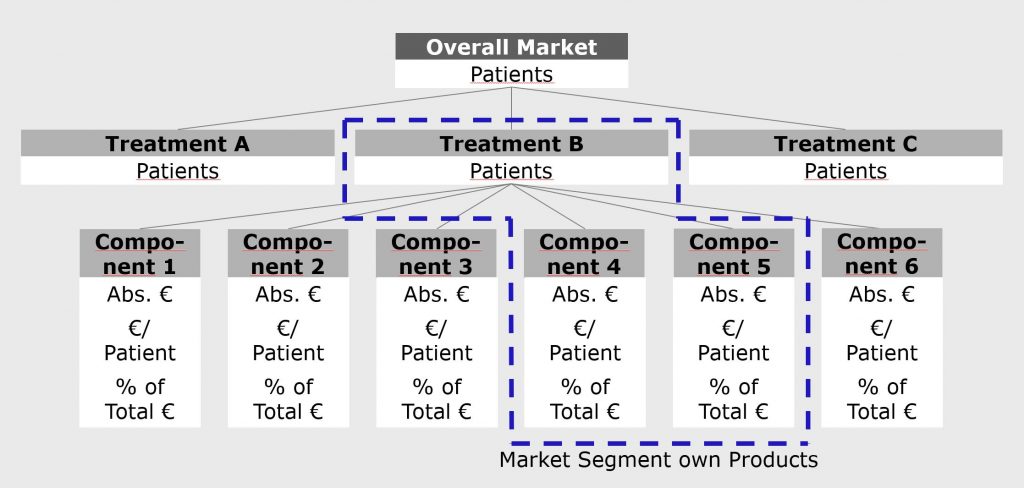

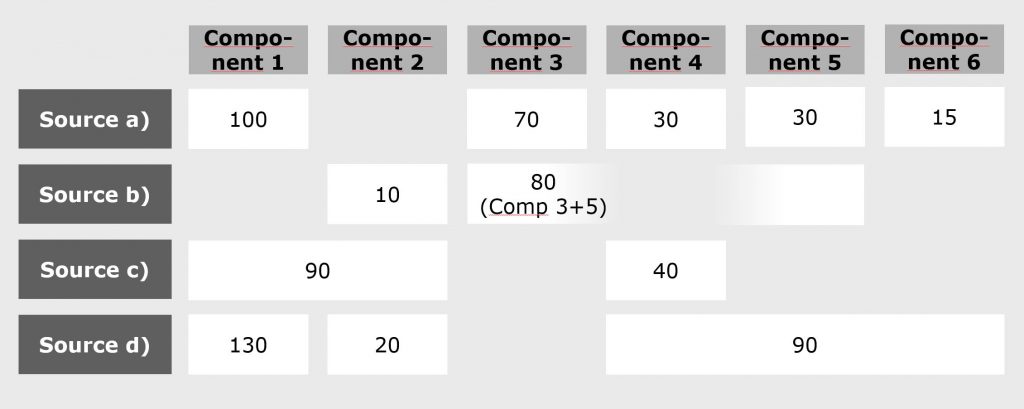

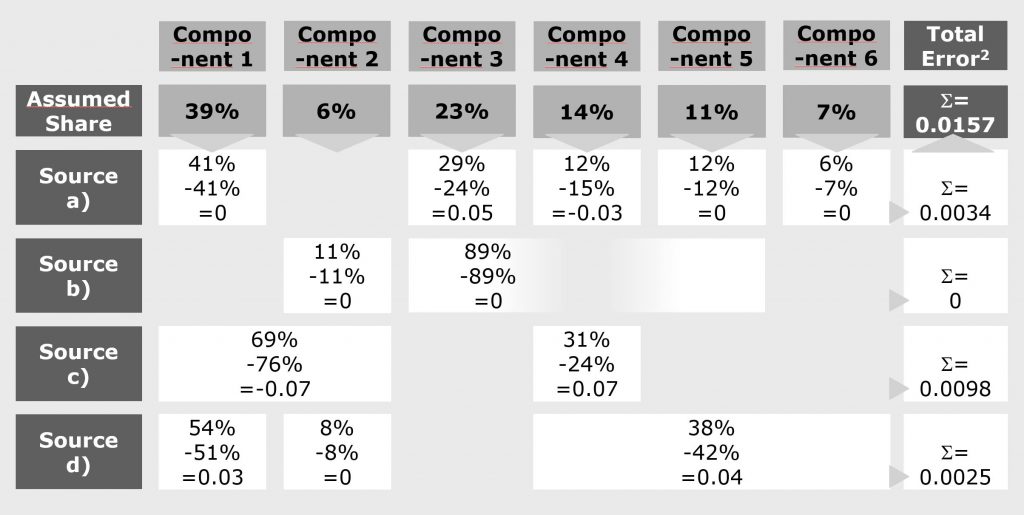

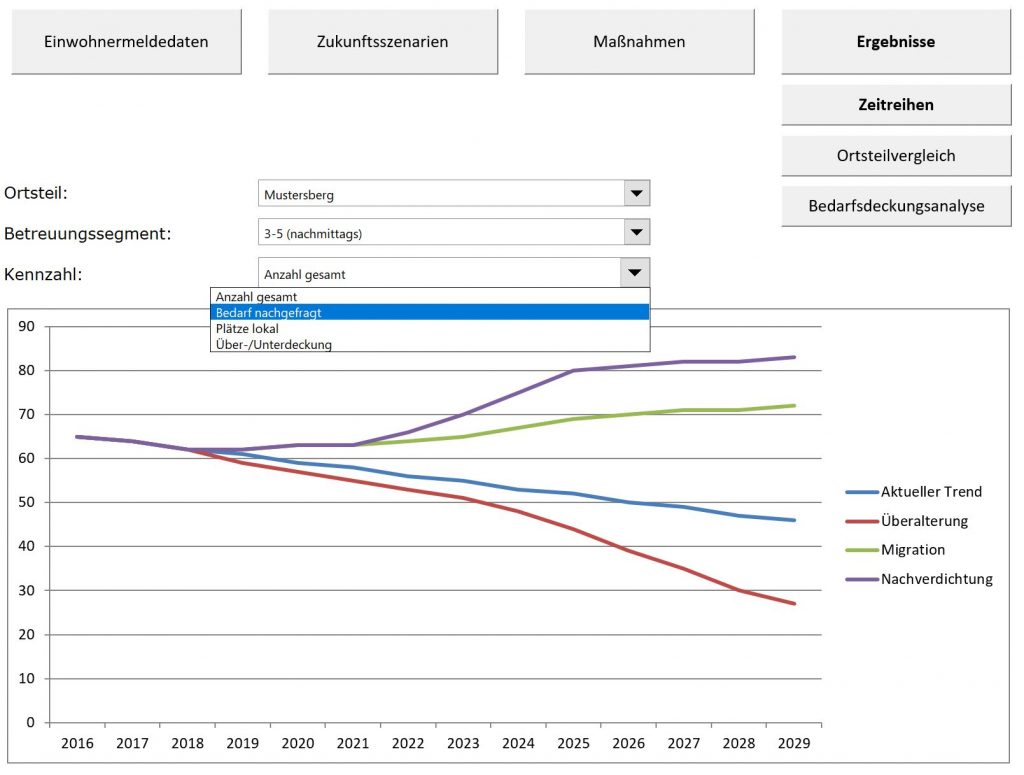

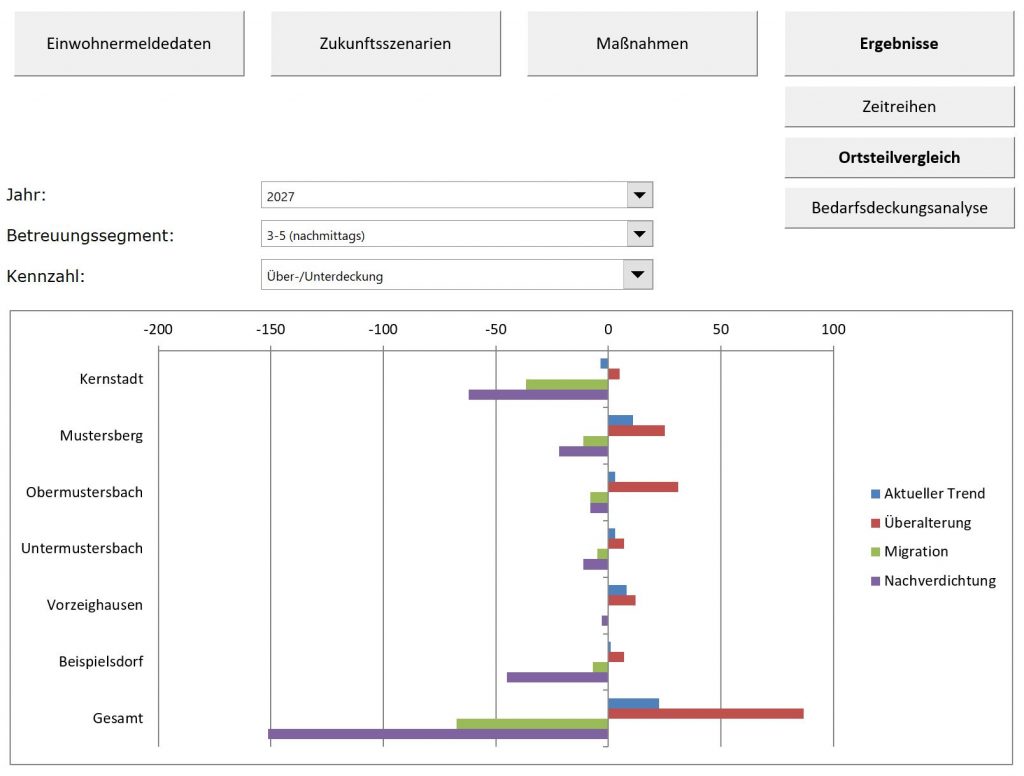

Scenario planning represents uncertainties in the form of future trajectories that are internally consistent and plausible but clearly distinguishable from one another. To this end, the parameters in which future developments may differ are first compiled (for example, young families moving in or out, number of births, share of toddlers needing care…). Then different future developments are sketched for each parameter, and these are finally combined into consistent scenarios, each of which describes one development for every parameter. The scenarios are meant to show different paths through the space of possible future developments and, where possible, also touch the limits of what is realistic.
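The combination step can be sketched in a few lines of code. This is an illustration only: the parameter names, their possible developments, and the consistency rules in `is_consistent` are assumptions made up for the example, not part of any established method. In practice a planning team would pick a handful of clearly distinct scenarios from the consistent combinations rather than work with all of them.

```python
from itertools import product

# Hypothetical parameters, each with a few possible future developments.
parameters = {
    "net_migration": ["outflow", "stable", "inflow"],
    "births": ["falling", "stable", "rising"],
    "toddler_care_share": ["low", "high"],
}


def is_consistent(scenario):
    """Reject combinations assumed to be implausible (illustrative rules)."""
    if scenario["net_migration"] == "inflow" and scenario["births"] == "falling":
        return False
    if scenario["net_migration"] == "outflow" and scenario["births"] == "rising":
        return False
    return True


names = list(parameters)
# Every combination of one development per parameter.
candidates = [dict(zip(names, combo)) for combo in product(*parameters.values())]
# Keep only those combinations that pass the consistency rules.
scenarios = [s for s in candidates if is_consistent(s)]

print(len(candidates), "combinations,", len(scenarios), "consistent")
```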

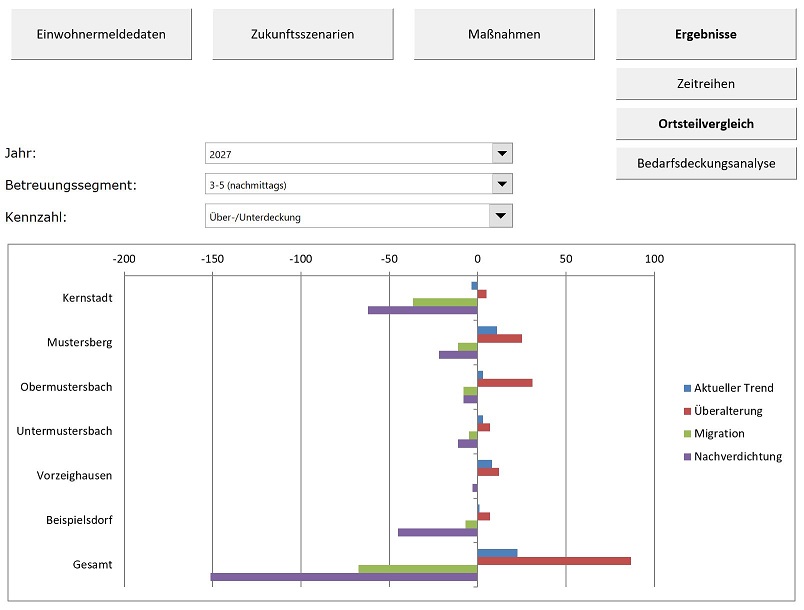

In its classic form, scenario planning is a purely qualitative instrument that is not meant to deliver planning figures. The approach can, however, readily be transferred to quantitative planning if the parameters and their values are expressed in numbers. Depending on the question, the scenarios can either be quantified for a specific point in time or modelled as trajectories over time.

Because the scenarios show only individual, exemplary developments, an interactive model can offer a way to play through relationships and to test how the possible future developments worked out in scenario planning interact with conceivable measures of one's own. The model also makes it possible to vary the scenario assumptions directly. This allows questions of the following kind to be answered: Under which conditions will the planned capacity suffice until year X? By when must a decision between extending a kindergarten and building a new one be made? When would a deviating development first lead to noticeable waiting lists for childcare places, and would there still be time to take countermeasures? Is it worth creating multi-purpose premises or a temporary solution, or is lasting utilisation of a new day care centre foreseeable?
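A minimal sketch of such a model might look as follows. It assumes a constant annual growth rate per scenario, a fixed capacity, and a fixed lead time for building new places. All figures, the start year, and the function names (`project_demand`, `first_shortfall_year`, `latest_decision_year`) are hypothetical and chosen only to show the mechanics.

```python
def project_demand(start_year, start_demand, annual_growth, years):
    """Project demand per year under a constant growth rate (an assumption)."""
    return {
        start_year + i: round(start_demand * (1 + annual_growth) ** i)
        for i in range(years + 1)
    }


def first_shortfall_year(demand_by_year, capacity):
    """Return the first year in which demand exceeds capacity, or None."""
    for year in sorted(demand_by_year):
        if demand_by_year[year] > capacity:
            return year
    return None


def latest_decision_year(shortfall_year, lead_time_years):
    """Latest year to decide so that new capacity is ready in time."""
    if shortfall_year is None:
        return None
    return shortfall_year - lead_time_years


capacity = 120        # places available today (invented)
lead_time_years = 2   # assumed time needed to plan and build

for name, growth in {"stable": 0.0, "growth": 0.05}.items():
    demand = project_demand(2020, 100, growth, years=8)
    shortfall = first_shortfall_year(demand, capacity)
    print(name, shortfall, latest_decision_year(shortfall, lead_time_years))
```

Varying `capacity`, `lead_time_years`, or the growth rates answers the "until when" and "by when" questions for each scenario.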

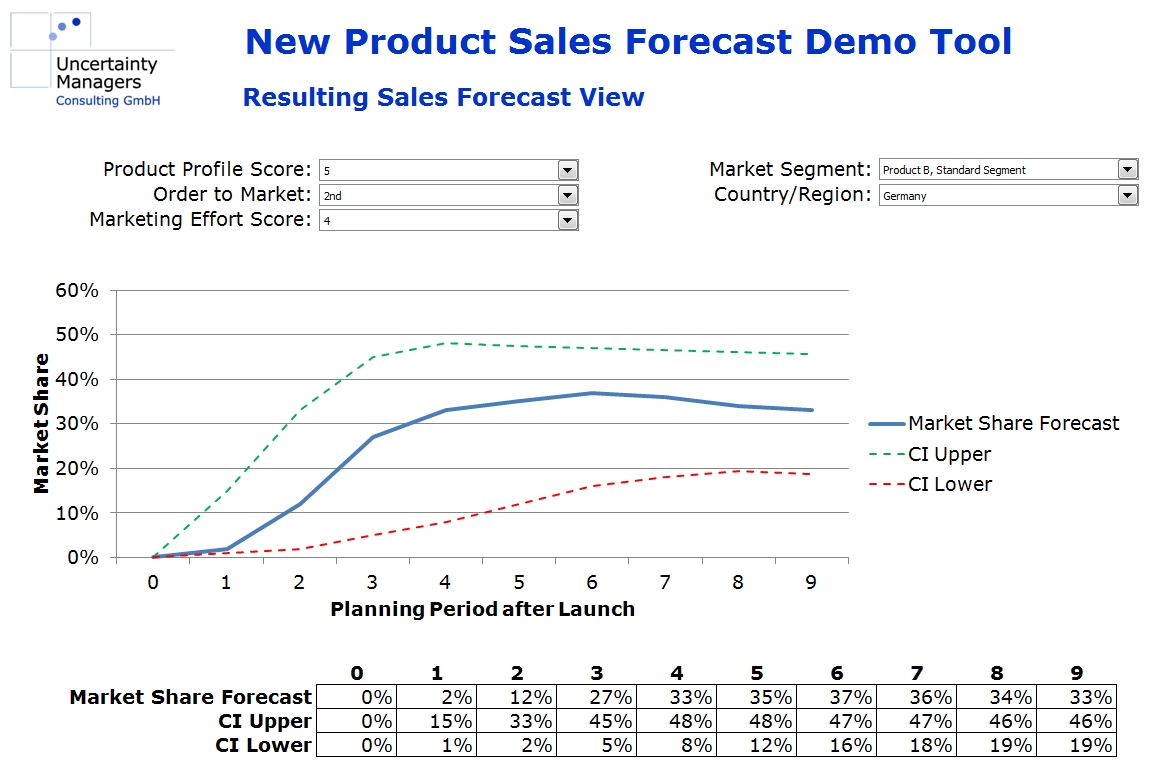

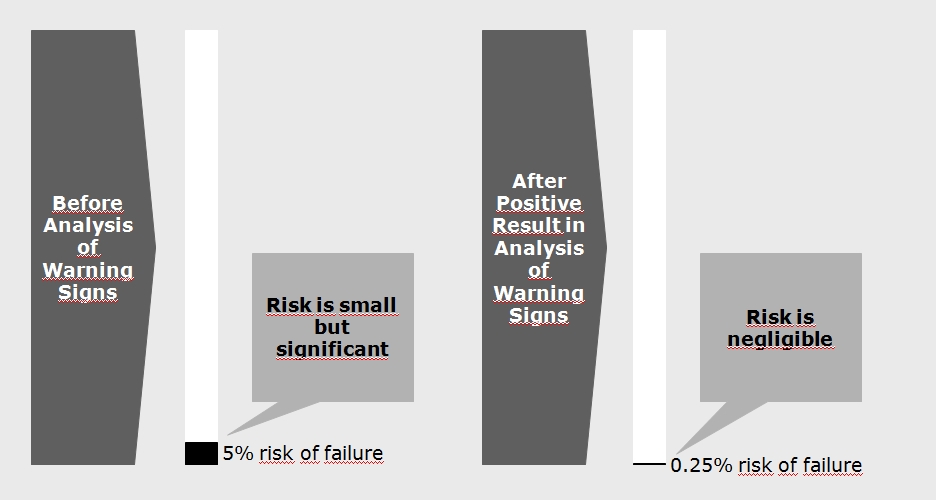

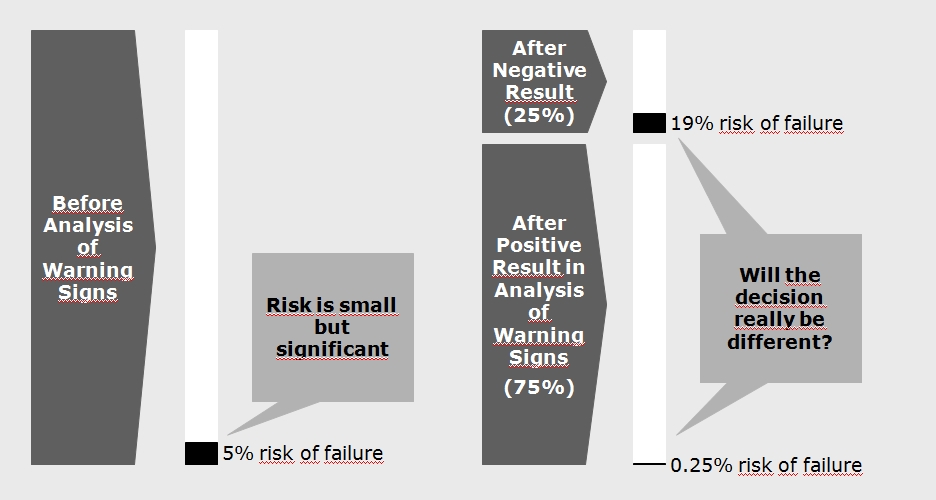

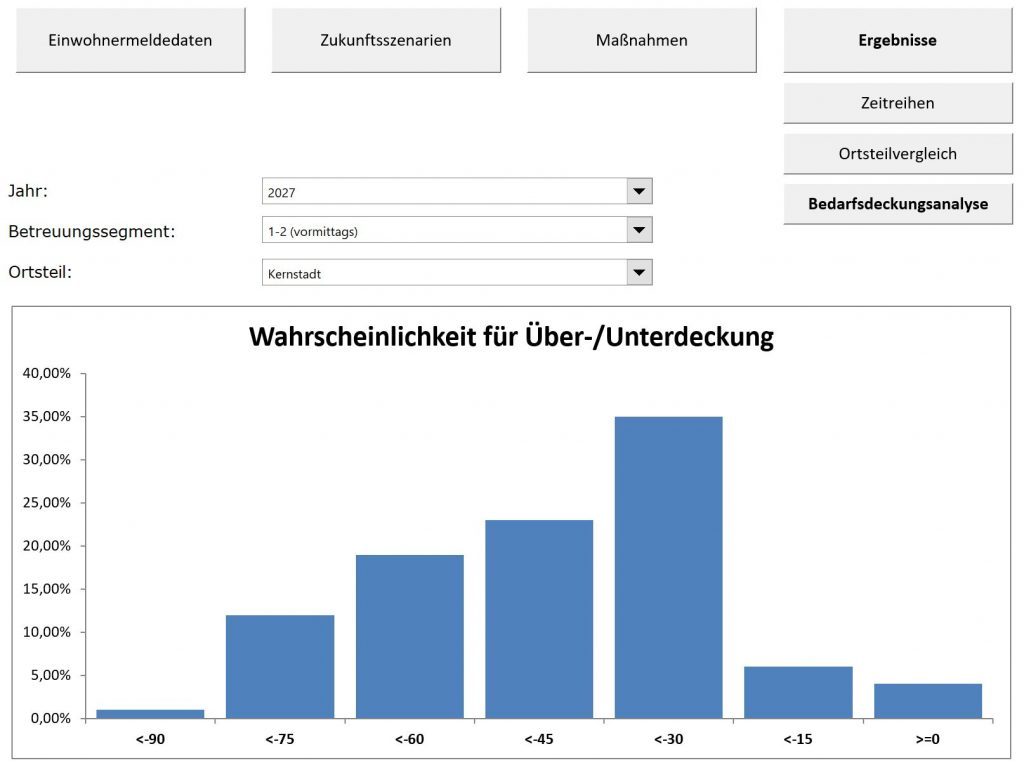

If spreads with corresponding probabilities of occurrence are estimated for the individual parameters underlying the scenarios, this enables a statistical analysis of the resulting risks. This can be done, for example, by Monte Carlo simulation, in which random numbers are generated again and again within the assumed spreads over many runs and the results for the overall system are recorded. This makes it possible to answer questions such as: With what probability will today's after-school care places still be sufficiently utilised despite additional all-day school classes? How many new toddler care places are needed to avoid long waiting lists in 2022 with a probability of 95 percent?
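The following sketch shows the principle for the second question. It is a toy example, not a calibrated model: the triangular distributions, their bounds, and the function names `simulate_demand` and `places_for_confidence` are assumptions for illustration, and the two parameters are treated as independent, which a real model would need to check.

```python
import math
import random


def simulate_demand(n_runs, seed=42):
    """Draw toddler-care demand n_runs times from assumed spreads."""
    rng = random.Random(seed)  # fixed seed so the runs are reproducible
    demands = []
    for _ in range(n_runs):
        # Assumed spread for the number of toddlers: low, high, most likely.
        children = rng.triangular(250, 400, 300)
        # Assumed spread for the share of toddlers needing a place.
        share = rng.triangular(0.30, 0.60, 0.40)
        demands.append(children * share)
    return demands


def places_for_confidence(demands, confidence):
    """Smallest whole number of places covering `confidence` of the runs."""
    ordered = sorted(demands)
    index = math.ceil(confidence * len(ordered)) - 1
    return math.ceil(ordered[index])


demands = simulate_demand(10_000)
places_95 = places_for_confidence(demands, 0.95)
covered = sum(d <= places_95 for d in demands) / len(demands)
print("places needed for 95 percent coverage:", places_95)
print("share of runs covered:", covered)
```

Subtracting the places that already exist from `places_95` would give the number of new places under these assumptions.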

Models of this kind are not new. In large corporations some have been in use for a long time, even though the required data and quantitative estimates are often far harder to generate there and considerably less precise because of market uncertainties. In the public sector, similar approaches are established, for example, in demand planning for transport infrastructure. With its steadily growing importance to society, childcare deserves a comparably professional look into the future.