In his new book, Ego. Das Spiel des Lebens (The Game of Life), Frank Schirrmacher, famous German columnist and editor of Frankfurter Allgemeine Zeitung, attributes both the collapse of communism and the behavior of humans in modern capitalism to a combination of game theory and advanced computing. According to Schirrmacher, game theory has turned humans into completely rational egoists who run the entire economy through IT-controlled financial markets.

While Ego is quite obviously meant to be an entertaining sensation story rather than a textbook, no business school lecture or book on strategic planning would be complete without a chapter on game theory. As early as their 1944 classic Theory of Games and Economic Behavior, the developers of game theory, mathematician John von Neumann and economist Oskar Morgenstern, pointed out the concept’s applicability to corporate strategic decisions.

To estimate the impact game theory can actually have in corporate strategy (to be discussed in part 2 of this article) or in the steering of whole economies, let us take a very brief look at what game theory actually does. This is hardly the place for a complete introduction to the rather large field of game theory. Therefore, we will simply recall some important aspects needed to outline the capabilities and limitations of the concept. To refresh your knowledge in more depth, there is a multitude of resources on the web, from articles and videos to presentations and whole books. For readers with some memory of the basics, the Wikipedia article is also a good starting point on the various types and applications of game theory.

Game theory represents a decision as a game with clearly defined rules that can be modeled mathematically. A game consists of a specified number of players (typically two in the games cited as examples) who have to make decisions (typically just one per game), and there are predefined payoffs for each player, which depend on the decisions of all players combined. Two-player games with only one decision can be described in the form of a payoff matrix, in which the columns describe the options of one player and the rows the options of the other. Each matrix field contains the payoffs for both players. Game theory then derives, for each player, the decision that leads to the highest payoff given what the other player can be expected to do. Commonly cited examples of games are the prisoner’s dilemma, the chicken game and the battle of the sexes.

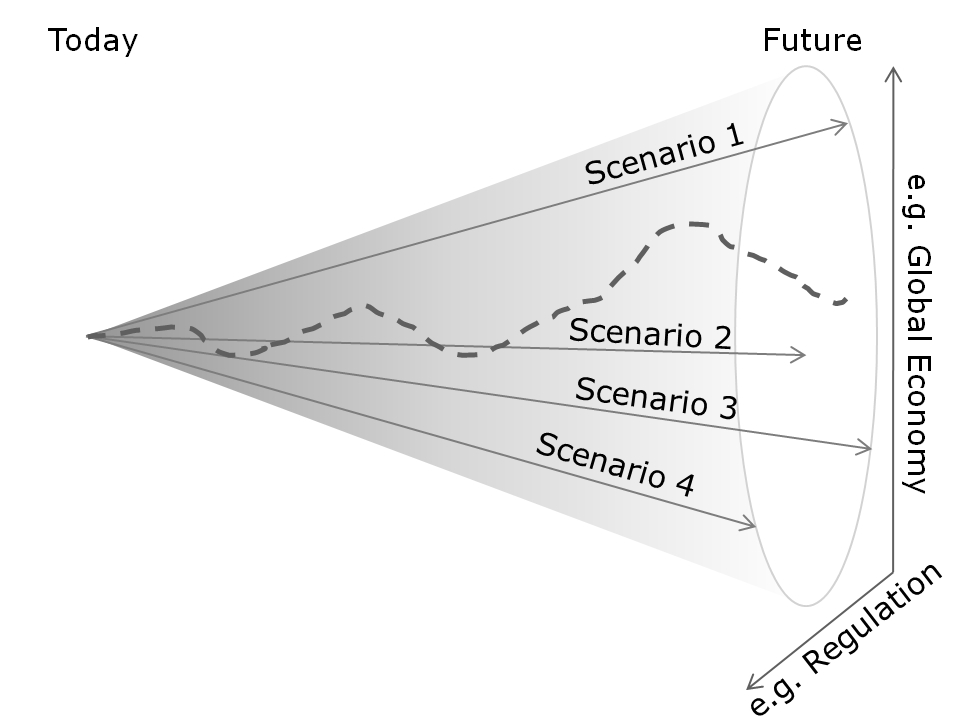
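To make the idea of a payoff matrix concrete, here is a minimal sketch in Python. The payoff numbers are illustrative assumptions for a prisoner’s dilemma and are not taken from the figure; the function name `pure_nash_equilibria` is likewise just a label chosen for this example. It checks every field of the matrix and keeps those in which neither player could gain by changing only their own decision.

```python
from itertools import product

# Illustrative prisoner's dilemma (assumed values, not from the article's figure).
# Key: (row player's choice, column player's choice)
# Value: (row player's payoff, column player's payoff)
# Payoffs are negative years in prison, so a higher number is better.
PRISONERS_DILEMMA = {
    ("silent", "silent"): (-1, -1),
    ("silent", "confess"): (-10, 0),
    ("confess", "silent"): (0, -10),
    ("confess", "confess"): (-5, -5),
}


def pure_nash_equilibria(payoffs):
    """Return all fields where no player gains by deviating alone."""
    rows = sorted({r for r, _ in payoffs})
    cols = sorted({c for _, c in payoffs})
    equilibria = []
    for r, c in product(rows, cols):
        row_pay, col_pay = payoffs[(r, c)]
        # Row player cannot do better by switching rows while c stays fixed.
        row_ok = all(payoffs[(r2, c)][0] <= row_pay for r2 in rows)
        # Column player cannot do better by switching columns while r stays fixed.
        col_ok = all(payoffs[(r, c2)][1] <= col_pay for c2 in cols)
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria


print(pure_nash_equilibria(PRISONERS_DILEMMA))
```

With these assumed numbers, the only stable field is the one in which both players confess, although both would be better off staying silent. That tension is what makes the prisoner’s dilemma such a popular example.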

Various types of complexity can be added to such a game. There can be more players, who may or may not have previous knowledge of the others’ decisions. Players can have the aim of cooperating and achieving the highest total payoff, or they can compete and even try to harm each other. There can be several consecutive or simultaneous decisions to make, and the game can be played once or repeatedly. Payoffs can be the same for each player or different; they can be partially unknown or depend on probability. For games with several rounds, complex strategies can be derived. If a strategy only specifies probabilities for each option, while the actual decisions are made randomly according to these probabilities, it is called a mixed strategy. As the complexity of the game grows, analytically optimizing strategies becomes increasingly difficult.
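As a rough illustration of a mixed strategy, the following Python sketch computes the probabilities for a two-player game with two options each, using the standard indifference condition: each player randomizes so that the other is indifferent between his or her two options. The chicken-game payoffs and the function name `mixed_equilibrium_2x2` are assumptions made for this example, and the formula is only meaningful when both results lie between 0 and 1.

```python
from fractions import Fraction
import random


def mixed_equilibrium_2x2(row_payoffs, col_payoffs):
    """Return (p, q) for a 2x2 game.

    p = probability that the row player picks the first row,
    q = probability that the column player picks the first column.
    Each probability makes the *other* player indifferent between options.
    """
    (a, b), (c, d) = row_payoffs
    (e, f), (g, h) = col_payoffs
    denom_q = a - b - c + d
    denom_p = e - f - g + h
    if denom_q == 0 or denom_p == 0:
        raise ValueError("no unique indifference point for this game")
    q = Fraction(d - b, denom_q)  # makes the row player indifferent
    p = Fraction(h - g, denom_p)  # makes the column player indifferent
    return p, q


# Assumed chicken-game payoffs; option order is (swerve, straight).
ROW = [[0, -1], [1, -10]]   # row player's payoffs
COL = [[0, 1], [-1, -10]]   # column player's payoffs

p, q = mixed_equilibrium_2x2(ROW, COL)
print(p, q)

# The actual decision is then drawn at random according to the strategy.
decision = random.choices(["swerve", "straight"], weights=[p, 1 - p])[0]
print(decision)
```

With these assumed payoffs, each driver swerves with probability 9/10. Making a head-on collision less catastrophic in the payoff table would lower that probability.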

Based on these principles, can game theory really deliver what is attributed to it? How powerful is this tool? Starting with Schirrmacher’s book, can game theory be the decision machine for a whole economy that he describes?

First of all, Schirrmacher ascribes the economic collapse of the Soviet Union and the whole communist bloc to the superior use of game theory by the US. That would, however, mean that the Soviet Union’s dwindling economic strength must have been caused at least in part by the influence of a methodically acting outside competitor. In fact, the omnipresent problems of socialist economies around the world – misallocation of resources, inefficiency, lack of motivation, corruption and nepotism – come from within the system. Trade restrictions were limited to goods with a potential military significance, and at least the East German economy was even kept alive with credits from the West.

The only area in which American influence had a truly massive impact on the Soviet economy was the excessive transfer of resources to the military sector in the nuclear arms race. But did the United States really need intricate decision models to try to stay ahead technologically while maintaining at least a somewhat similar number of weapons as the potential enemy? Obviously not. Did it take game theory to understand the Soviet concept of outnumbering any opponent’s weapons by roughly a factor of three? That was simply the Red Army’s success formula from World War II and easily observable from the 1950s onward.

Game theory has to quantify outcomes as payoffs, often in the form of money, or at least in terms of utility. Can such a model help to predict the secret decision processes, more often than not driven by personal motives, in the inner circles of the Soviet leadership? Does it contribute anything more valuable than the output of classical political and military intelligence? There is a reason why the military was much more interested in game theory as a tool for battlefield tactics than as a tool for global strategy.

So if game theory contributed little or nothing to the end of communism, how about Schirrmacher’s second hypothesis? Has game theory turned our decision makers into greedy rational egoists ignoring all social responsibility? Indeed, game theory works for decisions to be made based on the payoff matrix, and in the simplest form, the payoffs just correspond to profits. Commentators point out that game theory can lead to cooperative as well as competitive strategies, but cooperative strategies will also be aiming at maximizing individual or shared payoffs.

The actual point is that game theory in no way implies that a decision maker must or even should aim to maximize profits (although if the decision maker is a manager paid by his company’s shareholders waiting for their dividends, there are good arguments that he should, with or without game theory). Game theory attempts to show a decision maker which strategy should lead to maximizing an abstract payoff. That payoff may be profit, or it may result from any other utility function. For military officers, the payoff may, for example, correspond to minimizing own casualties or to the number of civilians evacuated from a danger zone. For a sales manager, it may be the number of products sold or customer satisfaction.

Even if game theory identifies a strategy as leading to the maximum payoff, that still does not mean that the decision maker has to follow that strategy. For example, even if the payoff is identical to profit, game theory can be used to estimate how much short-term profit must be sacrificed to follow a more socially accepted strategy.
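Such an estimate can be sketched in a few lines of Python. The strategy names, the profit figures and the function `profit_sacrificed` are all hypothetical and invented purely for illustration: for each decision the competitor might make, the sketch compares the profit of the preferred strategy with the profit of the best possible reply.

```python
# Hypothetical profits (e.g. in millions) for our own firm; all names and
# numbers are invented for illustration only.
# Key: (our strategy, competitor's strategy)
PROFIT = {
    ("aggressive", "aggressive"): 2,
    ("aggressive", "moderate"): 8,
    ("moderate", "aggressive"): 1,
    ("moderate", "moderate"): 6,
}


def profit_sacrificed(profit, preferred, competitor_choice):
    """Profit given up by playing `preferred` instead of the
    profit-maximizing reply to `competitor_choice`."""
    own_options = {own for own, _ in profit}
    best = max(profit[(own, competitor_choice)] for own in own_options)
    return best - profit[(preferred, competitor_choice)]


for rival in ("aggressive", "moderate"):
    print(rival, profit_sacrificed(PROFIT, "moderate", rival))
```

In this made-up case, choosing the moderate strategy costs between 1 and 2 units of profit, depending on what the competitor does. Whether that price is worth paying remains the decision maker’s call, not the model’s.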

In short, game theory is simply one of many decision support tools available to managers and as firmly or loosely linked to profit maximization as any other of these tools.

There is, however, one point at which game theory always relies on the maximization of the assumed (monetary or other) payoff: in guessing the probable decisions of the other parties involved, be they competitors or cooperation partners. Without further information on the other parties’ intentions, game theory has to assume they will maximize payoffs – otherwise there will be no basis for any calculation. In a situation where everyone uses game theory, maximizing payoffs should therefore even help the competitors because it makes one’s actions predictable. What that implies for the applicability of game theory in actual strategic planning will be discussed in part 2 of this article.

Dr. Holm Gero Hümmler

Uncertainty Managers Consulting GmbH